Let’s start from the beginning: discover the power of ChatGPT to create your own personalized solution with no-code tools. Find out what you need to start creating efficient scenarios!

Artificial intelligence has transformed the way we interact with technology, and ChatGPT is a prime example. Developed by OpenAI, this advanced language model allows for the creation of interactive and dynamic applications, and even without programming knowledge, it’s possible to take full advantage of it, thanks to no-code tools. Some possibilities include:

- Customer service: responding to common customer questions and queries, speeding up service and improving user experience.

- Bookings and appointments: facilitating the process of bookings and appointments, whether for restaurants, medical consultations, or general services.

- Research and feedback: collecting user opinions and feedback on products, services, or experiences, helping to improve and adapt to the target audience’s needs.

In this article, we’ll go over what you need to start building scenarios on the Make platform that integrate ChatGPT in an efficient, customizable way. If you’re not familiar with Make, I suggest you take a look at the Make for Beginners article before reading this one, and if you don’t have a Make account yet, I suggest you create one. Among the no-code tools, it’s one of the ones I use the most, because it offers A LOT of flexibility in operations and flows. So, shall we start?

Step 1: Activate your developer access in OpenAI

The OpenAI Platform is the official platform of OpenAI, where developers and companies can access and use the services and APIs provided by OpenAI. The platform offers a user-friendly interface and tools to interact with OpenAI’s technologies, such as the GPT models and DALL-E, among others.

Through this platform, users can create and manage API keys, access relevant documentation, experiment with the API interactively, and monitor resource usage and limits. It’s a centralized place to learn about and use OpenAI’s services in artificial intelligence projects and applications.

What is an API?

An API, or Application Programming Interface, is a way for one piece of software to communicate with another. Imagine it as a waiter in a restaurant: you, as the customer, place your order (request) with the waiter (API), who takes your order to the kitchen (the other software). The kitchen prepares your food (processes the request) and the waiter (API) brings the ready dish (response) back to you.

In technological terms, the API allows different programs to share information and resources with each other in an easy and organized way, without users needing to understand the technical details of how this happens. For example, in weather forecast apps, the API provides real-time weather data for the app to display to the user. It’s a way to simplify communication and collaboration between different software to create richer and more integrated experiences.
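To make the waiter analogy a bit more concrete, here is a minimal sketch in Python. Everything in it is hypothetical: `weather_api` is a local stand-in for a remote service, and the forecast data is invented for illustration. The point is only the shape of the exchange: a request goes in, a response comes back, and the app never sees how the "kitchen" works.

```python
def weather_api(request):
    """Stand-in for a remote weather service (the 'kitchen')."""
    # Hypothetical data; a real API would look this up live.
    forecasts = {"Lisbon": {"temperature_c": 22, "condition": "sunny"}}
    city = request["city"]
    if city not in forecasts:
        return {"status": 404, "error": f"No forecast for {city}"}
    return {"status": 200, "data": forecasts[city]}


def show_weather(city):
    """The 'customer': sends a request and formats the response."""
    response = weather_api({"city": city})
    if response["status"] != 200:
        return response["error"]
    data = response["data"]
    return f"{city}: {data['temperature_c']} degrees, {data['condition']}"


print(show_weather("Lisbon"))  # Lisbon: 22 degrees, sunny
```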

How to activate my developer access?

If you already have an account to access ChatGPT, you need to create a new account for the OpenAI Platform, as the two systems are different and accounts from one platform are not transferable to the other. To create your account on the OpenAI Platform, follow these steps:

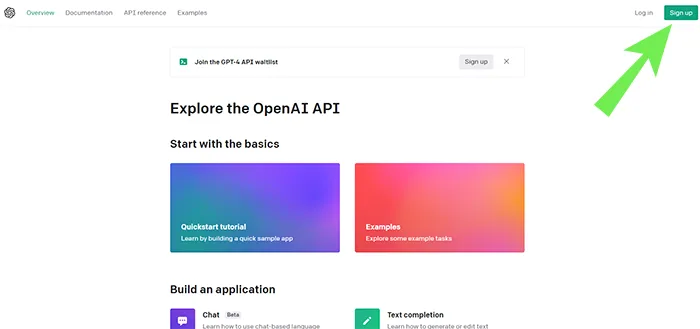

1 ▪️ Visit the OpenAI Platform

2 ▪️ Click on the “Sign Up” button.

3 ▪️ Enter your email address and create a secure password.

4 ▪️ Click on the “Create Account” button.

5 ▪️ You can choose to create an organization or keep your personal account just with your name, if you prefer.

6 ▪️ Verify your email address and phone, following OpenAI’s guidelines.

7 ▪️ After verifying your email address, log in to your newly created account.

8 ▪️ Once your account is created, you will find the user menu in the upper right corner, where the Log In and Sign Up buttons used to be.

Key concepts for using the OpenAI API

The OpenAI API can be applied to practically any task involving understanding or generating natural language, code, or images. Below are some key concepts you need to know before you start exploring this world:

Prompts

A prompt is the instruction text you provide to the model to generate the result. In other words, it’s the question you ask. Some examples of questions (prompts):

- Content generation: “Write an article about…”

- Summary: “Please, summarize the following text…”

- Translation: “Please, translate the following text from Portuguese to English: …”

- Questions: “What is the capital of France?”

- Sentiment analysis: “Determine the sentiment of the following sentence: ‘…’ Positive or negative?”

Tokens

Tokens are the basic units of text used by the OpenAI platform to process and generate language. A token can be a single character, a piece of a word, or a whole word, depending on the language and complexity of the text. The platform breaks the text into tokens to analyze and create responses, and costs are defined according to the number of tokens used.
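If you want a quick feel for how many tokens a text might use, here is a rough sketch in Python. It assumes the common rule of thumb of about 4 characters per token for English text, which is only an approximation; the real count comes from the model’s tokenizer and can differ noticeably, especially in other languages. The function name `estimate_tokens` is mine, not something from OpenAI.

```python
import math


def estimate_tokens(text, chars_per_token=4):
    """Rough token estimate; real counts come from the model's tokenizer."""
    if not text:
        return 0
    # Assumption: roughly 4 characters per token for English text.
    return math.ceil(len(text) / chars_per_token)


print(estimate_tokens("What is the capital of France?"))  # 8
```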

Models

Models are artificial intelligence structures created by OpenAI to process and generate language. They are trained with large amounts of data and learn to understand and produce text in different contexts.

There are several models available on the OpenAI platform, each with different levels of capability and suitable for different tasks. When using the platform, you can choose the most appropriate model for your goal, thus ensuring better results and efficiency in language processing.

OpenAI has various models, including GPT-4, which as of today has very limited availability. To work in Make, there are currently 4 main models that are part of GPT-3:

- davinci: is the most advanced and powerful model, available in GPT-3 and GPT-3.5. It is useful for complex tasks that require a high level of understanding, such as creative writing, problem-solving, and text analysis. However, it is also the slowest and most computationally expensive model among the variants.

- curie: offers a good balance between performance and cost, being suitable for a wide range of natural language processing tasks, such as summarizing texts, answering questions, and generating content in general.

- babbage: The babbage variant is smaller than curie and can be a more economical and faster option for less complex tasks. It is useful in situations that do not require maximum precision, such as sentiment classification, text analysis, and other natural language processing tasks.

- ada: The ada model is the smallest and fastest among the GPT-3 variants. It is ideal for simpler and less computationally expensive tasks, such as basic content generation, keyword analysis, and tasks that do not require a high level of precision or complexity.

Step 2: Creating my scenario with OpenAI in Make

Creating scenarios using the Make platform in conjunction with OpenAI’s services has gained popularity due to the ease and efficiency they provide. No-code solutions and artificial intelligences combine to allow for the construction of personalized projects, even without programming knowledge. In this context, we’ll learn how to take advantage of these tools to develop scenarios that meet your specific needs in a practical and innovative way.

What do I need to start configuring Make with OpenAI?

To start creating scenarios in Make using OpenAI’s services, you’ll need two essential items from OpenAI:

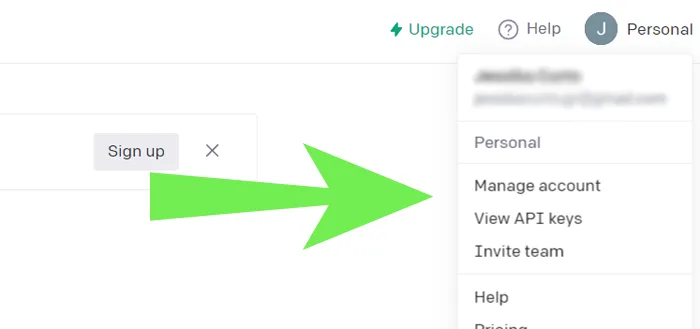

Organization ID

The Organization ID is the unique identifier of your organization within the OpenAI platform. It allows you to manage and access your organization’s specific resources.

With your account logged in, you can find this data here (or in Manage Account > Settings).

API Key

The API Key is an access key that allows authentication and secure communication between Make and the OpenAI API. With this key, you can make requests to the API to use OpenAI’s language models in your scenarios. Creating it is very easy:

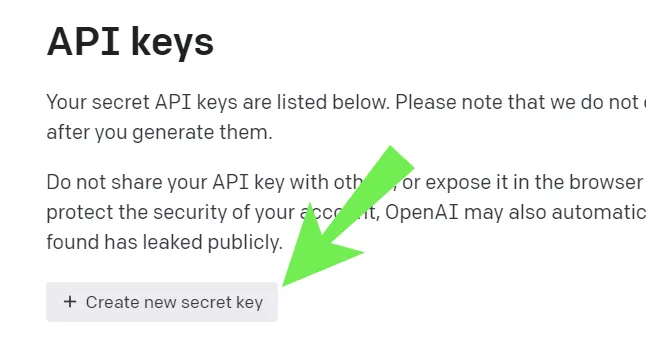

1 ▪️ Visit the API Keys page (or click on your name in the upper right corner, then View API Keys)

2 ▪️ Click on Create new secret key

Done, your API Key is created. It’s a string of letters and numbers that starts with “sk-…”. ATTENTION: copy the generated key and paste it somewhere you can retrieve it later (for example, in the notepad). Once you close the window, the key is hidden and you will never be able to see it in full again.
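Make handles the connection for you, but if you are curious about how these two items are used behind the scenes, here is a small sketch in Python. The `Authorization` and `OpenAI-Organization` headers are the ones the OpenAI API expects; the function name `build_auth_headers` and the environment variable names `OPENAI_API_KEY` and `OPENAI_ORG_ID` are my own assumptions for illustration. Nothing is sent anywhere; the code only builds the headers.

```python
import os


def build_auth_headers(api_key=None, organization_id=None):
    """Build the HTTP headers that authenticate a request to the OpenAI API."""
    # Assumption: fall back to environment variables so the key
    # never has to be written directly in the code.
    api_key = api_key or os.environ.get("OPENAI_API_KEY")
    organization_id = organization_id or os.environ.get("OPENAI_ORG_ID")
    if not api_key:
        raise ValueError("No API key found; set OPENAI_API_KEY first.")
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    # The organization header is optional.
    if organization_id:
        headers["OpenAI-Organization"] = organization_id
    return headers


# Placeholder values, not real credentials.
print(build_auth_headers("sk-example", "org-example"))
```

Keeping the key in an environment variable (or in Make’s connection settings) instead of pasting it into shared files makes it harder to leak by accident.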

Configuring Make with OpenAI

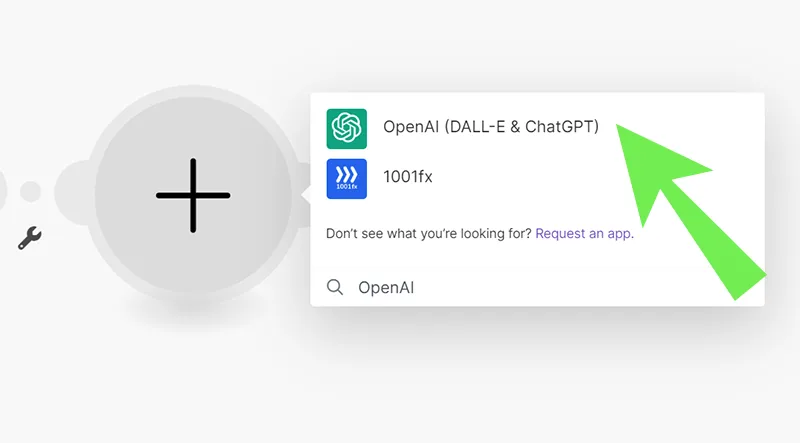

1 ▪️ In your desired scenario of Make, add a new module and search for OpenAI

2 ▪️ Select the OpenAI option (DALL-E & ChatGPT)

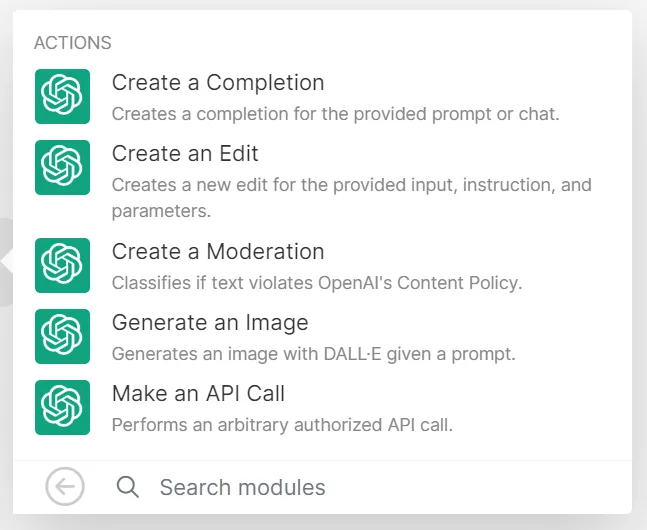

3 ▪️ Select the module you need.

- Create a completion: to generate texts

- Create an Edit: edits and improves existing text, with suggestions and corrections for grammar, spelling, and style (typically placed after a Create a Completion module)

- Create a moderation: checks if the text violates OpenAI’s content policies.

- Generate an Image: generates an image with DALL-E.

- Make an API Call: makes an API call different from the ones mentioned above.

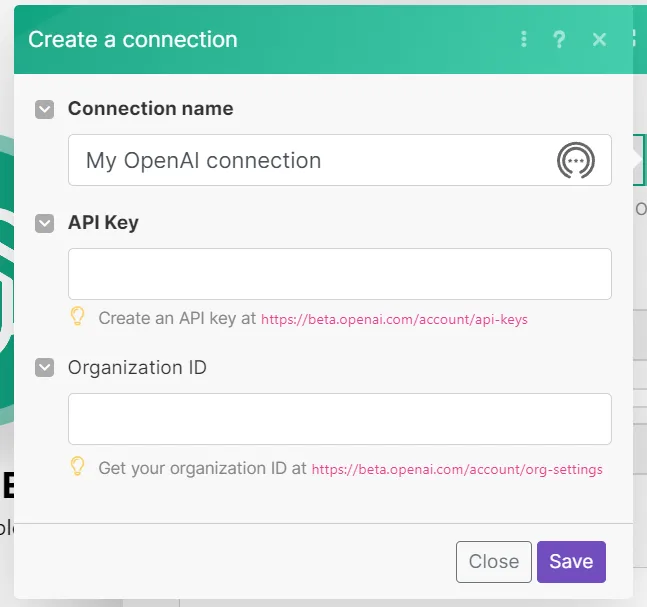

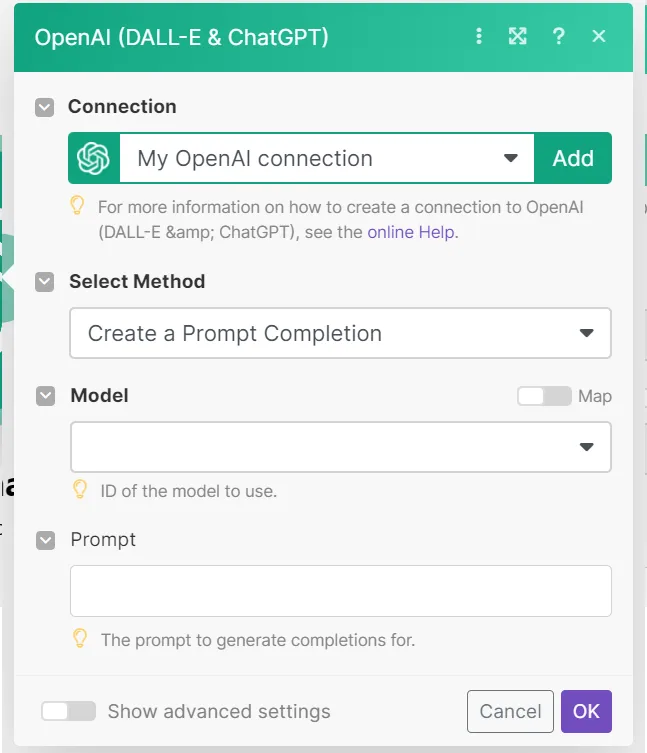

For this example, we select Create a Completion. You need to configure your API connection.

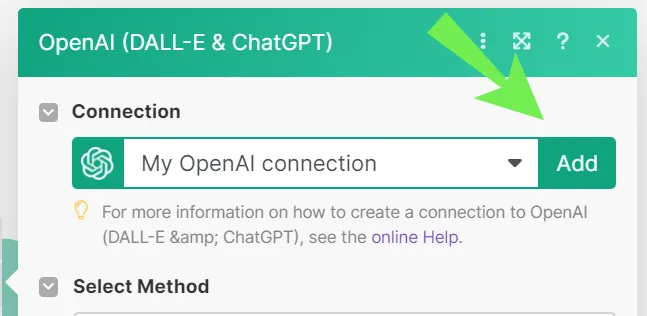

4 ▪️ Click on “Add” and fill in the connection with the API Key and Organization ID you collected from OpenAI.

8 ▪️ Done, you are connected! Now you just need to configure the call for what you need.

OpenAI is constantly updating, so the contents and configuration possibilities may vary a bit from this explanation. Every time I log in there is something different… But based on what is available today:

- Select Method: if you want to generate a text (Prompt) or a chat response (Chat)

- Model: the model you want to work with. This is the part that changes the most, but as of today, the models below and their usage variations (text for text) are available. For more information on the models, look here.

- Prompt: the instruction for content generation.

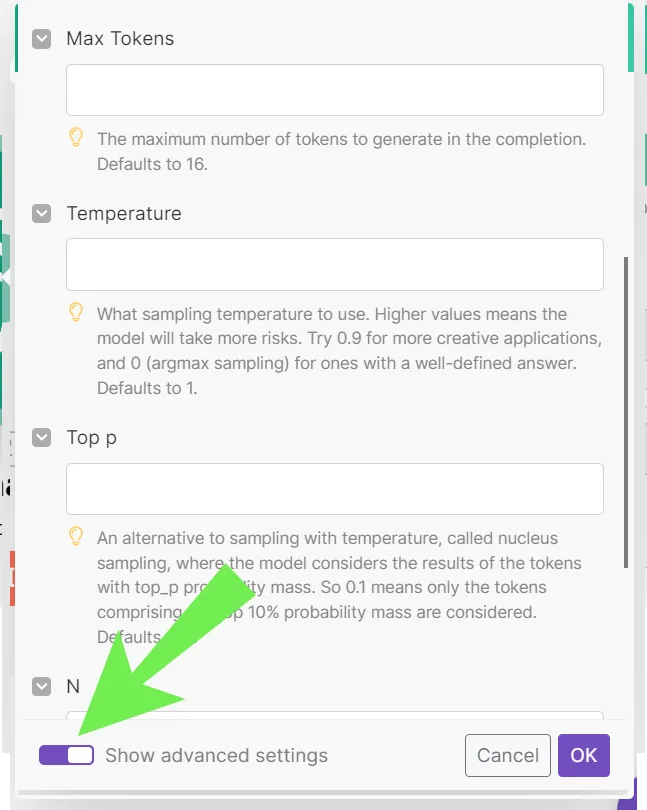

9 ▪️ In the lower left corner, select Show Advanced settings.

Important to change:

- Max Tokens: if you want to generate longer or more complex responses, I suggest you increase the number of tokens. If you leave this field blank, the maximum value will be 16 tokens (somewhere between 16 characters and 16 words). Put in a number of tokens that is enough to generate the type of content you want. For example: for larger texts, with more paragraphs, I leave it between 1500-1800 tokens. Keep in mind that the tokens in your prompt also count toward your usage and toward the model’s overall limit.

Optional (more complex):

These parameters I recommend you use gradually to adjust and fine-tune the responses. It’s trial and error, because each prompt and goal may need different adjustments.

- Temperature: a parameter used to control the creativity or randomness of the results. It determines how likely it is that the API will choose a rarer or more unusual word or phrase to complete a task. A higher temperature value will result in more creative and random responses. In general, higher temperature values are used for creative tasks, such as generating product names, while lower values are preferred for more technical tasks, such as text prediction.

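- Top P: controls the diversity of the text. For example, if Top P is set to 0.9, the API will only choose from the smallest group of most probable tokens whose combined probability adds up to 90%, ignoring the unlikely remainder. Lower values make the text more focused; higher values allow more variety.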

- N: How many responses do you need for the same question? The default is 1.

- Echo (YES/NO): returns your prompt together with the generated response, so the output starts with the original prompt text followed by the completion. This can be useful when you want the question and the answer together as one continuous text.

- Other Input Parameters: can be used to further customize text generation and include options like “frequency_penalty” (discourages tokens the more often they have already appeared, which reduces word-for-word repetition), “presence_penalty” (discourages any token that has already appeared at least once, which pushes the model toward new topics) and “stop” (a list of sequences that, when found in the generated output, will cause the text generation to stop). Including these parameters can help adjust the output generated by the model to meet the specific needs of the user.

10 ▪️ DONE! Your module is ready to be used.
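If you want some intuition for what the Temperature and Top P settings do, here is a toy sketch in Python. It is an illustration of the general idea only, not OpenAI’s actual implementation: the scores, the candidate words, and the function names are all invented for the example.

```python
import math


def apply_temperature(scores, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [score / temperature for score in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probabilities, top_p):
    """Keep the most probable tokens until their combined probability reaches top_p."""
    ranked = sorted(probabilities.items(), key=lambda item: item[1], reverse=True)
    kept, cumulative = [], 0.0
    for token, prob in ranked:
        kept.append(token)
        cumulative += prob
        if cumulative >= top_p:
            break
    return kept


# Hypothetical next-word candidates and their probabilities.
candidates = {"hello": 0.5, "hi": 0.25, "hey": 0.2, "greetings": 0.05}
print(top_p_filter(candidates, 0.9))  # ['hello', 'hi', 'hey']

# A low temperature concentrates probability on the top-scoring option.
print(apply_temperature([2.0, 1.0, 0.0], 0.5))
print(apply_temperature([2.0, 1.0, 0.0], 1.5))
```

With Top P at 0.9, the rare candidate “greetings” is never considered. With a lower temperature, the first option takes a larger share of the probability, so the output becomes more predictable.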

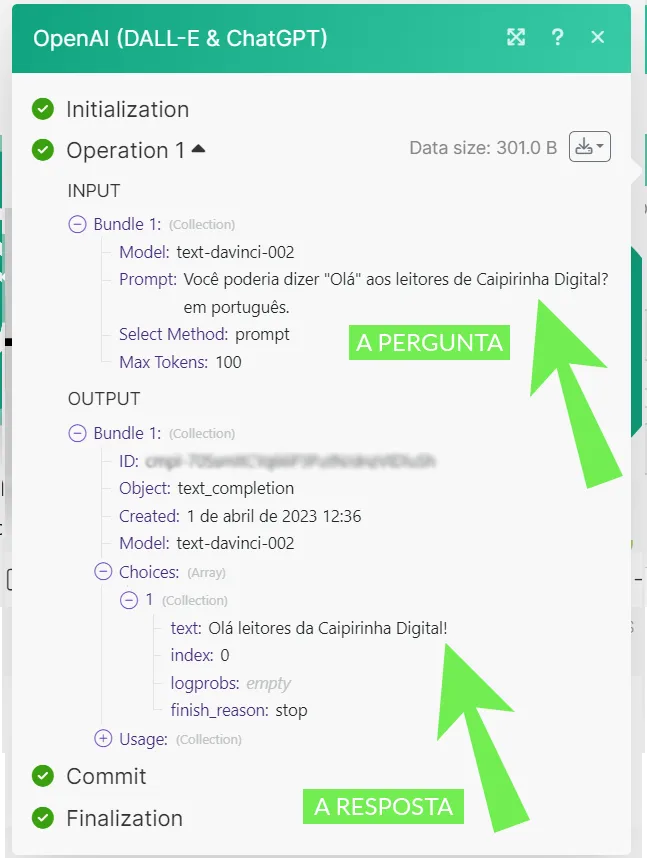

To exemplify, I created a simple prompt “Could you say “Hello” to the readers of Caipirinha Digital? in Portuguese”. I chose the text-davinci-002 model and limited it to a maximum of 100 tokens.

The chat returned “Olá leitores da Caipirinha Digital!”.

If you click on the + next to Usage, you can also see how many tokens were used: 14 for the response and 29 for the question (43 in total).
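For the curious, the example above corresponds roughly to the request sketched below in Python. I am assuming the legacy completions endpoint and its `model`, `prompt`, and `max_tokens` fields; Make may build its call differently, and the helper name `build_completion_payload` is mine. The code only builds and prints the request body, it does not send anything.

```python
import json

# Assumption: the legacy completions endpoint; Make may call the API differently.
COMPLETIONS_URL = "https://api.openai.com/v1/completions"


def build_completion_payload(prompt, model="text-davinci-002", max_tokens=100):
    """Return the JSON body for a completion request (nothing is sent)."""
    return {"model": model, "prompt": prompt, "max_tokens": max_tokens}


payload = build_completion_payload(
    'Could you say "Hello" to the readers of Caipirinha Digital? in Portuguese'
)
print(json.dumps(payload, indent=2, ensure_ascii=False))

# Token counts reported under Usage in the example above.
usage = {"prompt_tokens": 29, "completion_tokens": 14}
usage["total_tokens"] = usage["prompt_tokens"] + usage["completion_tokens"]
print(usage["total_tokens"])  # 43
```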

The prompts can be as varied as you need. They can include dynamic elements from previous modules and formulas, for example.

How much does it cost to use the OpenAI API?

When using the OpenAI API, it’s important to be aware that costs can vary depending on the chosen plan and specific use. There are different plans available that adjust to each user’s needs. Here is some information about the plans and associated costs:

- Free Plan (Free Trial): This plan is offered as a free trial and usually has a set usage limit, such as a specific amount of tokens or usage time. It’s ideal for those who are starting to explore the API and want to get an idea of how it works before committing to a paid plan. OpenAI gives you US$5 to explore and experiment with the tool (believe me, it’s a lot!)

- Pay-as-you-go Plan: This plan allows you to pay only for the resources you actually use. The cost is based on the number of tokens used, meaning the more tokens you use in your requests to the API, the higher the cost. This plan is useful for those who have variable or uncertain use of the API and want to pay only for what they actually use.

- Monthly or annual plans: These plans are suitable for users with more consistent and predictable API usage needs. They typically offer a fixed amount of tokens for a monthly or annual price, which can result in savings compared to the pay-as-you-go plan.

It’s also important to consider that, in addition to the direct costs associated with using the API, there may be additional costs related to infrastructure and development required to integrate the API into your project or application.

After understanding the basic concepts about the OpenAI API and the available models, it’s important to address the cost of using this powerful tool. The price of using the OpenAI API is determined by the number of tokens processed during interactions with the models.

As I mentioned, OpenAI is evolving very quickly, and with it, the token pricing policy also changes rapidly. I suggest taking a look at the Pricing page for updated information. It’s important to note that the prices on the page are for a THOUSAND (1,000) tokens. Basic queries are included in the credits of the free plan, and you can test a lot!
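Since prices are quoted per 1,000 tokens, the math for a single call is simple. Here is a small sketch in Python, using the 43 tokens from the earlier example. The price of 0.02 per 1,000 tokens is a made-up placeholder, not a real OpenAI price; replace it with the current value from the Pricing page.

```python
def estimate_cost(total_tokens, price_per_1k_tokens):
    """Cost in dollars for a number of tokens at a per-1,000-token price."""
    return total_tokens / 1000 * price_per_1k_tokens


# 43 tokens from the earlier example; the price is a hypothetical placeholder.
cost = estimate_cost(43, 0.02)
print(f"${cost:.5f}")  # $0.00086

# How many calls of that size would fit in the US$5 of free credit.
print(int(5 / cost))  # 5813
```

Even with a placeholder price, this shows why small test prompts barely make a dent in the free credits.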

In conclusion, integrating Make with the OpenAI ChatGPT API offers an easy and efficient way to leverage natural language processing capabilities in your projects, without the need for complex coding. By using Make and ChatGPT, you can create personalized scenarios and solutions, from virtual assistants to content generation systems.

It’s important to remember to consider the costs associated with using the OpenAI API and to manage token consumption efficiently to ensure economical use of the available tools. By following the steps and tips addressed in this article, you will be well prepared to start exploring the full potential of the OpenAI API and create innovative solutions with the Make platform.

With the combination of these two technologies, the possibilities are virtually endless, and you can further expand the boundaries of what is possible to achieve in the field of no-code and artificial intelligence.